Dynamic Object SLAM with Dense Optical Flow

2022.5-2023.5

Supervisors: Dr. Xingxing Zuo, Prof. Dr. Stefan Leutenegger

Technical University of Munich

Abstract

We present a method for dynamic SLAM that combines classical optimization with deep learning to estimate camera poses, dynamic object poses, and scene depth accurately and robustly in dynamic scenes. The approach uses a state-of-the-art optical flow estimator with a GRU update operator, together with instance segmentation, to separate dynamic regions from the static background and to track the pose of each dynamic object individually. To optimize camera and object poses together, we introduce a differentiable dynamic dense bundle adjustment layer. It jointly and globally refines camera poses, dynamic object poses, and depth maps using information from the entire image rather than from the static regions alone. Extensive experiments on the Virtual KITTI dataset demonstrate strong performance, even under severe occlusions and with limited constraints.

Overview

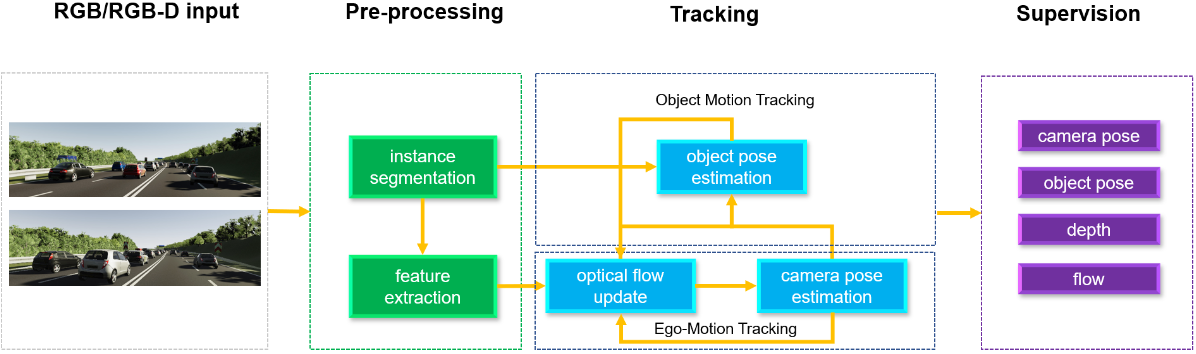

The input images are passed through an iterative optical flow estimator to determine the correspondence between frames, and Mask R-CNN is used to segment the static and dynamic regions. Given the estimated correspondence, a differentiable dynamic bundle adjustment layer, solved with Gauss-Newton optimization, then estimates the camera pose and the dynamic object poses. This layer produces residual updates to depth and pose that maximize the compatibility between the correspondence induced by depth and pose and the correspondence estimated by the network. The updated correspondence is fed back into the optical flow estimator, and the loop repeats until depth, pose, and correspondence converge.
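To make the idea of "correspondence induced by depth and pose" concrete, the following is a minimal NumPy sketch, not the project's implementation. It makes several simplifying assumptions that are not taken from the source: rotations are fixed to the identity, depth is held constant, the object motion is expressed in the first camera frame, the Jacobian is computed by finite differences instead of a differentiable layer, and the data are synthetic. All function names (`induced_correspondence`, `gauss_newton`, and so on) are hypothetical.

```python
import numpy as np

def backproject(uv, depth, K):
    """Lift pixels (N, 2) with depths (N,) to homogeneous 3D points (N, 4)."""
    pix = np.hstack([uv, np.ones((len(uv), 1))])
    pts = (np.linalg.inv(K) @ pix.T).T * depth[:, None]
    return np.hstack([pts, np.ones((len(uv), 1))])

def project(pts_h, K):
    """Project homogeneous 3D points (N, 4) to pixels (N, 2)."""
    x = (K @ pts_h[:, :3].T).T
    return x[:, :2] / x[:, 2:3]

def translation(t):
    """4x4 rigid transform with identity rotation (assumption for brevity)."""
    T = np.eye(4)
    T[:3, 3] = t
    return T

def induced_correspondence(uv, depth, K, T_cam, T_obj, is_dynamic):
    """Pixel locations in the second frame implied by depth and motion.

    Static pixels move with the camera transform only; dynamic pixels are
    first moved by the object motion, then by the camera transform.
    """
    pts = backproject(uv, depth, K)
    moved = np.where(is_dynamic[:, None], (T_obj @ pts.T).T, pts)
    return project((T_cam @ moved.T).T, K)

def gauss_newton(residual_fn, x0, iters=10, eps=1e-6):
    """Plain Gauss-Newton with a forward-difference Jacobian."""
    x = np.asarray(x0, dtype=float).copy()
    for _ in range(iters):
        r = residual_fn(x)
        J = np.empty((r.size, x.size))
        for k in range(x.size):
            dx = np.zeros_like(x)
            dx[k] = eps
            J[:, k] = (residual_fn(x + dx) - r) / eps
        # Solve the normal equations; the tiny damping term guards
        # against a numerically singular system.
        H = J.T @ J + 1e-9 * np.eye(x.size)
        x -= np.linalg.solve(H, J.T @ r)
    return x

# Synthetic scene: 20 static and 20 dynamic pixels with known depth.
rng = np.random.default_rng(0)
K = np.array([[700.0, 0.0, 320.0], [0.0, 700.0, 240.0], [0.0, 0.0, 1.0]])
uv = rng.uniform([0, 0], [640, 480], size=(40, 2))
depth = rng.uniform(5.0, 20.0, size=40)
is_dynamic = np.arange(40) >= 20

# Ground-truth motions, used only to synthesize the "network" correspondence.
t_cam_true = np.array([0.1, 0.0, -0.5])
t_obj_true = np.array([0.3, 0.0, 1.0])
target = induced_correspondence(
    uv, depth, K, translation(t_cam_true), translation(t_obj_true), is_dynamic
)

def residual(x):
    """Induced minus target correspondence; x = [t_cam (3), t_obj (3)]."""
    pred = induced_correspondence(
        uv, depth, K, translation(x[:3]), translation(x[3:]), is_dynamic
    )
    return (pred - target).ravel()

estimate = gauss_newton(residual, np.zeros(6))
```

In this toy setup the static pixels constrain the camera translation, while the dynamic pixels constrain the combined camera and object motion, so both can be recovered jointly from one residual vector. A full system would additionally optimize rotations and per-pixel depth and would weight each residual by the confidence of the flow estimate.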

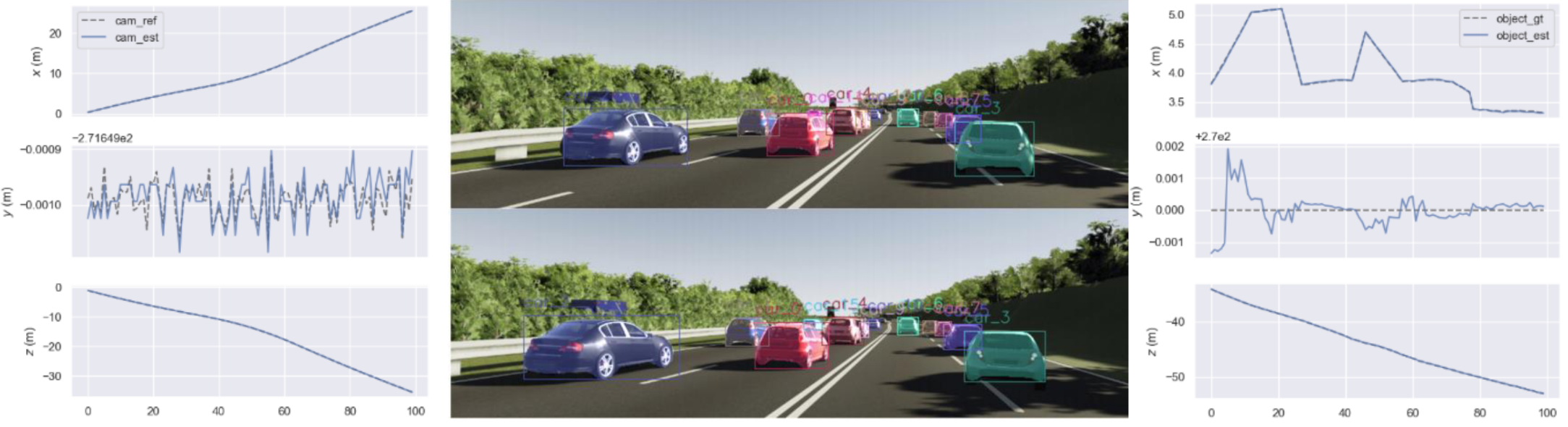

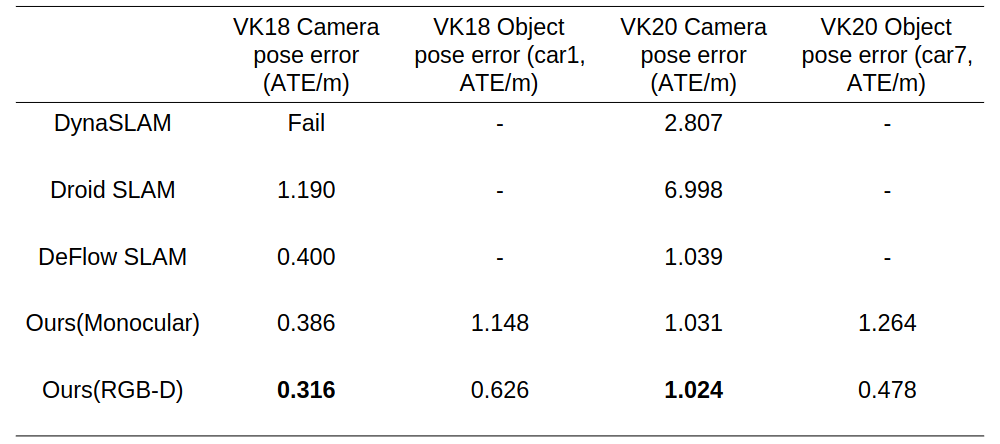

Experiment on Virtual KITTI dataset

The proposed method uses information from dynamic areas, which prior work has overlooked, and achieves more accurate camera pose estimation in the monocular setting.

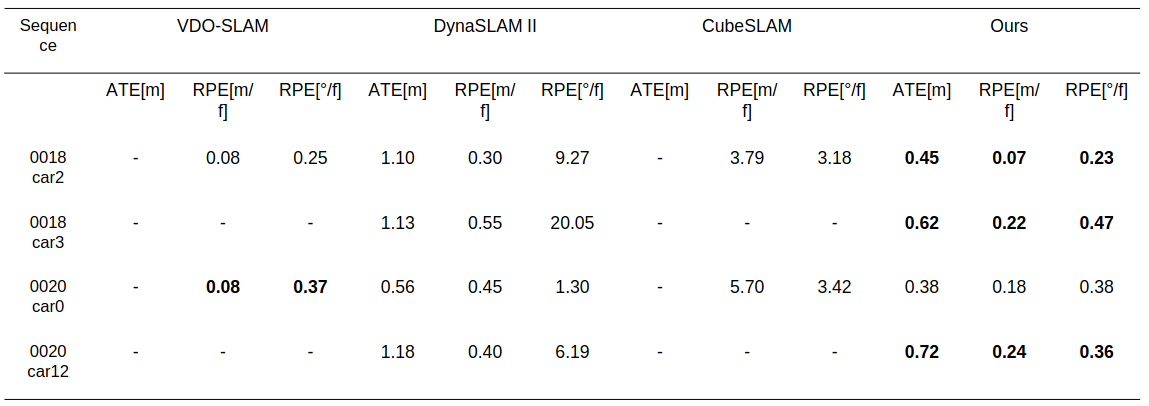

Experiment on KITTI Tracking dataset

On several object samples selected from previous studies, the estimated dynamic object poses are significantly more accurate than those of DynaSLAM II and CubeSLAM.

[Presentation] [code]